Free excerpt - Artificial intelligence. Freefall

Introduction

2023 was the third and, so far, the hottest summer of artificial intelligence. But what should developers and businesses do? Is it worth cutting staff and fully trusting the new technology? Or is it all a passing fad, with a new AI winter ahead? And how will AI be combined with classic management tools and practices for managing projects, products, and business processes? Does AI break all the rules of the game?

We will delve into these questions, and in the process of thinking and analyzing various factors, I will try to find answers to them.

I will say right away, there will be a minimum of technical information here. And even more so, there may be technical inaccuracies. But this book is not about what type of neural networks to choose for solving a particular problem. I offer you an analyst’s, manager’s, and entrepreneur’s perspective on what’s happening here and now, what to expect globally in the near future, and what to prepare for.

Who can use this book and what can it be useful for?

— Owners and top managers of companies.

They will understand what AI is, how it works, where the trends are heading, and what to expect. In other words, they will be able to avoid the main mistake in digitalization: distorted expectations. This means that they will be able to lead these areas and minimize costs, risks, and deadlines.

— IT entrepreneurs and startup founders.

They will be able to understand where the industry is moving, which IT products and integrations are worth developing, and what they will face in practice.

— IT specialists.

They will be able to look at development not only from a technical point of view, but also from an economic and managerial one. They will understand why up to 90% of AI products remain unclaimed. Perhaps this will help them in their career development.

— Ordinary people.

They will understand what the future holds for them and whether they should be afraid that AI will replace them. Spoiler alert: creative professionals are under threat.

Our journey will go through 3 major parts.

— First, we will dive in and understand what AI is, how it all started, what problems and opportunities it has, what trends are developing, and what the future holds for us.

— Next, we will look at the synergy of AI with systems-based tools: how they affect each other and in what scenarios AI will be applied in IT solutions.

— Finally, we will go through practical examples and give recommendations.

In my favorite tradition, the book will contain QR-codes (for the printed version) and active hyperlinks (for the electronic version) to useful articles and interesting materials.

As for the AI itself, it was used in the writing of the book to demonstrate its work, and these fragments are highlighted, as well as to search for ideas. The level of technology is still not enough to take materials and insert them into a book. It is also important to note that my main language is Russian, so I will make all requests to the AI in Russian.

I would like to conclude my preface by thanking the people who helped me:

— my wife Alisa and son Valery;

— my parents;

— my coach Evgeny Bazhov;

— my team, especially Alexander Peremyshlin;

— my partners and clients who have given me time to think, especially Kirill Neyelov;

— my project colleagues.

P.S. This book has been translated from my native language using artificial intelligence. Therefore, there may be grammatical and other errors.

Part 1. Introduction to Artificial Intelligence

Chapter 1. Introduction to AI

What is artificial intelligence?

To begin with, there is no single agreed definition. Let’s ask the AI itself, or rather several large language models (LLMs). Below are some answers with the original spelling.

GPT4: “Artificial intelligence (AI) is the ability of artificial intelligent systems to perform creative functions that are traditionally considered the prerogative of humans. It is related to the science and technology of creating intelligent machines, especially intelligent computer programs. It’s important to note that AI isn’t necessarily limited to biologically plausible methods.

Existing intelligent systems have rather narrow application areas. For example, programs that can beat a human at chess usually can’t answer questions.

In general, artificial intelligence allows computer systems to mimic human cognitive abilities, such as learning and problem solving.

This is an important area in modern computer science and data science, and its application spans many areas, from finance and medicine to entertainment and transportation.”

YandexGPT 2: “Artificial intelligence (AI) is a set of computer science, mathematics, biology, and psychology techniques that develop systems that can perform tasks that usually require human intelligence. Their main task is to model the human mind.”

YandexGPT 3 Pro: “Artificial intelligence (AI) is the ability of intelligent systems to perform creative functions that are traditionally considered the prerogative of humans. It is the science and technology of creating intelligent machines, especially computer programs that can perform tasks that require human intelligence, such as solving complex problems, learning from experience, understanding natural language, and being able to adapt to new situations. AI is related to the use of computers to understand human intelligence, but is not limited to biologically plausible methods.”

GigaChat: “Artificial intelligence (AI) is the ability of intelligent systems to perform creative functions that are traditionally considered the prerogative of humans. It is the science and technology of creating intelligent machines, especially intelligent computer programs. Artificial intelligence is related to the similar task of using computers to understand human intelligence, but is not necessarily limited to biologically plausible methods. Today’s smart systems have rather narrow areas of application. Artificial intelligence was founded as an academic discipline in 1956 and has generated several waves of optimism in the decades since.”

But to me, as a human, the simplest and most understandable definition feels closest: AI is any mathematical method that makes it possible to imitate human or other natural intelligence.

In other words, AI is a huge number of solutions, including primitive mathematical algorithms and expert systems based on rules.

And although this field was born around the 1950s, we are primarily interested in what it means today, in the early 2020s. And here there are three main areas.

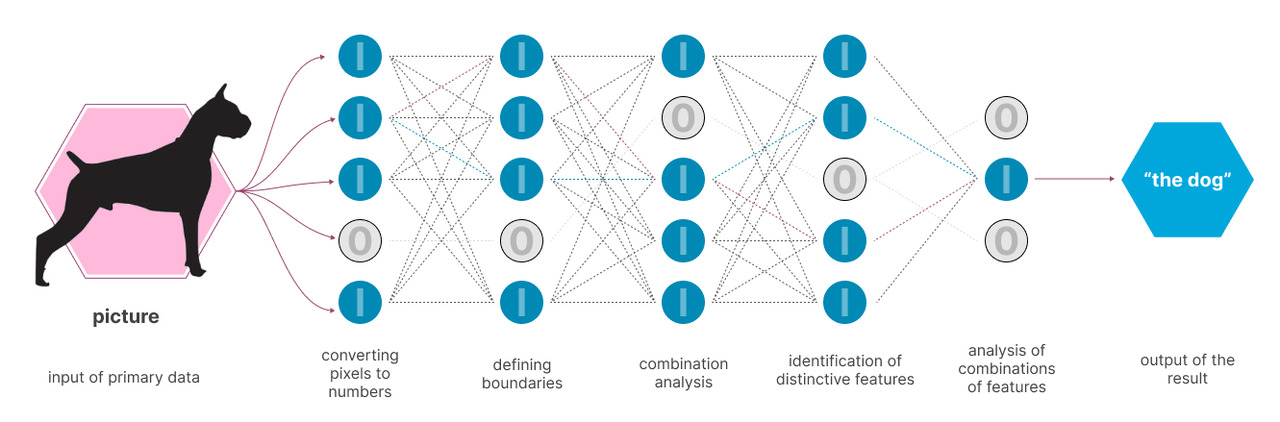

1. Neural networks — mathematical models created in the likeness of neural connections of the brain of living beings. Actually, the human brain is a super-complex neural network, the key feature of which is that our neurons are not limited to “on / off” states, but have many more parameters that cannot yet be digitized and fully applied.

2. Machine learning (ML) is a set of statistical methods that allow computers to improve the quality of the task they perform as they accumulate experience and are retrained. This direction has been known since the 1980s.

3. Deep learning (DL) covers not only learning with the help of a person who says what is right and what is wrong (much as we often raise children; this is called supervised learning), but also self-learning of systems (unsupervised learning, without human participation). It combines various training and data analysis techniques. This direction has been developing since the 2010s and is considered the most promising for creative tasks and for tasks where people themselves do not clearly understand the relationships involved. But here we cannot predict at all what conclusions and results the neural network will reach. What we can control is the data we “feed” to the AI model as input.

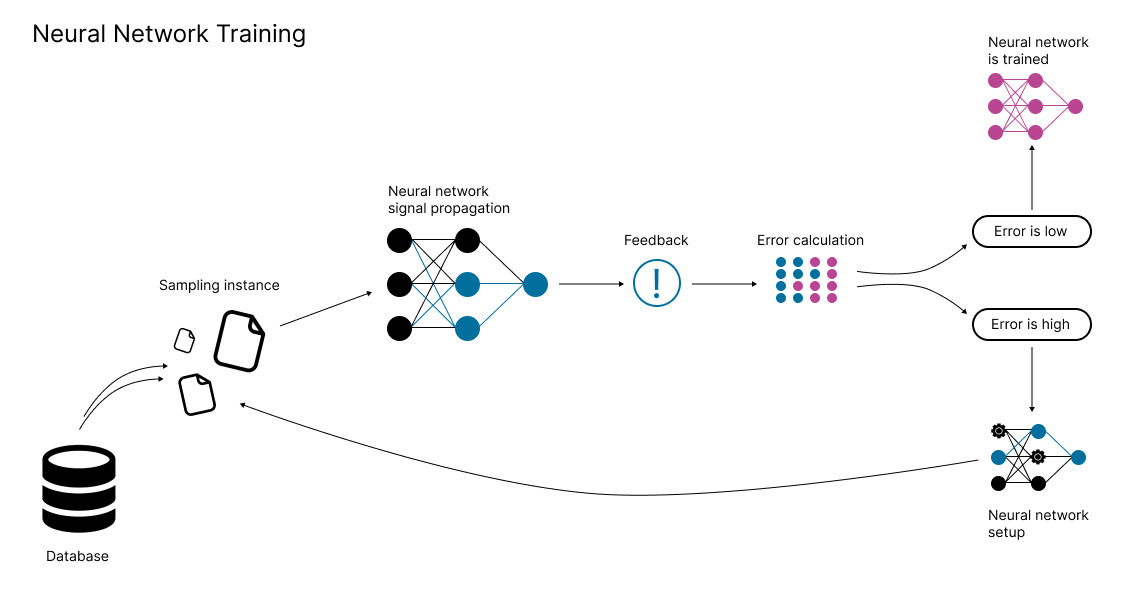

How are AI models trained?

Currently, most AI models are trained with human feedback (supervised learning): a person provides the input, the neural network returns an answer, and the person then tells it whether the answer was correct. And so on, time after time.

The so-called CAPTCHA (Completely Automated Public Turing test to tell Computers and Humans Apart) works in a similar way. These are the graphical security tests on websites that determine whether the user is a person or a computer. For example, you are shown a picture divided into parts and asked to mark the areas that contain bicycles, or you are asked to enter numbers or letters displayed in a distorted way on a generated image. In addition to the main task (the Turing test), this data is then used to train AI.

At the same time, there is also unsupervised learning, in which the system learns without human feedback. These are the most complex projects, but they also allow you to solve the most complex and creative tasks.
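The feedback loop described above (the model answers, a teacher says whether the answer was right, the model adjusts) can be sketched with a single artificial neuron. This is only a toy illustration under my own assumptions: the function names, the learning rate, and the choice of the logical AND task are hypothetical and are not taken from any real product.

```python
# Toy illustration: one artificial neuron (a perceptron) learns the
# logical AND function from labeled examples. The labels play the role
# of the human "teacher" who says whether the answer was correct.

def predict(weights, bias, inputs):
    """Return 1 if the weighted sum of the inputs is positive, else 0."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if total > 0 else 0

def train_perceptron(examples, epochs=20, lr=0.1):
    """Learn (weights, bias) from (inputs, correct_answer) pairs."""
    weights = [0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):
        for inputs, correct in examples:
            # 1. The model gives its answer.
            answer = predict(weights, bias, inputs)
            # 2. The teacher's feedback: 0 means the answer was right.
            error = correct - answer
            # 3. The model nudges its parameters toward the right answer.
            weights = [w + lr * error * x for w, x in zip(weights, inputs)]
            bias += lr * error
    return weights, bias

# Hypothetical training set: inputs and the "correct" answers for AND.
labeled_examples = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]

weights, bias = train_perceptron(labeled_examples)
predictions = [predict(weights, bias, x) for x, _ in labeled_examples]
print(predictions)
```

After a few passes over the examples the neuron reproduces all four labels. Real neural networks repeat the same cycle with millions or billions of parameters and far larger data sets.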

General features of current AI-based solutions

Fundamentally, all AI-based solutions at the current level of development have common problems.

— Amount of training data.

Neural networks need huge amounts of high-quality, labeled data for training. While a human can learn to distinguish dogs from cats from a couple of examples, AI needs thousands.

— Dependence on data quality.

Any inaccuracies in the source data strongly affect the final result.

— The ethical component.

There is no ethics for AI. Only math and task completion. As a result, complex ethical issues arise. For example, whom should an autopilot hit in a no-win situation: an adult, a child, or a pensioner? There are countless similar disputes. For artificial intelligence, there is neither good nor evil, nor any concept of “common sense”.

— Neural networks cannot evaluate data for reality and logic, and they are also prone to generating poor-quality content and AI hallucinations.

Neural networks simply collect data and do not analyze facts or their connectedness. They make a large number of mistakes, which leads to two problems.

The first is the degradation of search engines. AI has created so much low-quality content that search engines (Google and others) have begun to degrade. Simply because there is more low-quality content, it dominates. SEO specialists contribute heavily to this by mass-producing pages built around popular queries for promotion.

The second is the degradation of AI models. Generative models also use the Internet for “retraining”. As a result, people who use AI without checking its output fill the Internet with low-quality content themselves. And the AI starts using it. The result is a vicious circle that leads to more and more problems.

An article on this topic is also available by using the QR code and hyperlink.

Recognizing the scale of AI-generated disinformation, Google conducted a study on this topic. Scientists analyzed about two hundred media articles (from January 2023 to March 2024) about cases in which artificial intelligence was misused. According to the results, AI is most often used to generate fake images of people and false evidence of something.

— The quality of “teachers”.

Almost all neural networks are taught by people: they form requests and give feedback. And there are many limitations here: who teaches what, based on what data, and for what purpose?

— People’s readiness.

We should expect huge resistance from people whose work will be taken away by neural networks.

— Fear of the unknown.

Sooner or later, neural networks will become smarter than us. And people are afraid of this, which means that they will slow down development and impose numerous restrictions.

— Unpredictability.

Sometimes everything goes as planned, and sometimes (even if the neural network copes well with its task) even the creators struggle to understand how the algorithms work. The lack of predictability makes it extremely difficult to eliminate and correct errors in neural network algorithms. We are only learning to understand what we have created ourselves.

— Restriction by type of activity.

As of mid-2024, all AI is weak (we’ll discuss this term in the next chapter). Currently, AI algorithms are good at performing targeted tasks, but they do not generalize their knowledge well. Unlike a human, an AI trained to play chess will not be able to play another similar game, such as checkers. In addition, even deep learning does a poor job of processing data that deviates from its training examples. To use ChatGPT effectively, for example, you must already be an expert in the industry and formulate a deliberate and clear request.

— Costs of creation and operation.

It takes a lot of money to create neural networks. According to a report from Guosheng Securities, the cost of training the relatively primitive GPT-3 LLM was about $1.4 million. For GPT-4, the amounts already go into the tens of millions of dollars.

If we take ChatGPT-3 as an example, then just to process all requests from users, more than 30,000 NVIDIA A100 GPUs were needed. Electricity cost about $50,000 a day. A team and resources (money, equipment) are required to keep these systems “alive”. You also need to take into account the cost of maintenance engineers.

Again, these are common drawbacks for all AI solutions. Later on, we will return to this topic several times and discuss these shortcomings in more practical examples.
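To put the operating costs quoted above in perspective, here is some back-of-the-envelope arithmetic. It uses only the figures already cited in the text (reported estimates, not measurements) and rounds "more than 30,000" GPUs down to 30,000; the variable names are my own.

```python
# Figures quoted in the text (reported estimates, not measurements).
GPU_COUNT = 30_000                    # NVIDIA A100 GPUs serving user requests
ELECTRICITY_PER_DAY_USD = 50_000      # daily electricity bill
GPT3_TRAINING_COST_USD = 1_400_000    # one-off training cost estimate

# Electricity alone, extrapolated to a full year.
electricity_per_year = ELECTRICITY_PER_DAY_USD * 365

# Average electricity cost attributable to one GPU per day.
electricity_per_gpu_per_day = ELECTRICITY_PER_DAY_USD / GPU_COUNT

# Days of operation after which electricity equals the training cost.
days_to_match_training_cost = GPT3_TRAINING_COST_USD / ELECTRICITY_PER_DAY_USD

print(f"Electricity per year: ${electricity_per_year:,}")
print(f"Electricity per GPU per day: ${electricity_per_gpu_per_day:.2f}")
print(f"Days until electricity matches training cost: {days_to_match_training_cost:.0f}")
```

On these numbers, electricity alone comes to about $18 million a year, and the operating bill overtakes the quoted training cost in roughly four weeks. Hardware, staff, and maintenance come on top of that.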

Chapter 2: Weak, Strong, and Super-Strong AI

Now let’s talk about three concepts: weak, strong, and super-strong AI.

Weak AI

Everything we see today is weak AI (ANI, Artificial Narrow Intelligence). It can solve the highly specialized tasks it was originally designed for. For example, it can distinguish a dog from a cat, play chess, analyze videos and improve the quality of video / audio, advise on a subject area, and so on. But, for example, even the strongest weak AI for playing chess is absolutely useless for playing checkers. And AI for project management consulting is absolutely useless for planning equipment maintenance.

Strong and super-strong AI: what is it?

If the definition of AI is confusing, then the term “strong AI” or “general AI” is even more complicated. Let’s start by using LLM services again. To do this, I made a request: “Expand the abbreviation AGI and give a brief description of it.” Below I will give the answers of several LLMs in the original spelling.

YandexGPT 2: “AGI (Artificial General Intelligence) is a general-purpose artificial intelligence that can solve a variety of tasks typical of the human mind. AGI has the flexibility and adaptability of the human brain and can learn independently.”

YandexGPT 3 Pro: “AGI (Artificial General Intelligence) is a concept for creating artificial intelligence that will be able to solve a wide range of tasks at the level of human intelligence. This is the next step in the development of artificial intelligence after highly specialized systems, such as speech recognition or computer vision systems.”

YandexGPT 4 Pro RC: “AGI (Artificial General Intelligence) is artificial general intelligence, which is a hypothetical form of AI that can understand and solve problems at the same level as humans. AGI can learn, adapt, and make decisions in various fields, such as science, art, and social interactions.

Unlike specialized AI systems that are designed to perform specific tasks, AGI strives for versatility and the ability to solve a wide range of problems. However, creating an AGI remains a complex task that requires significant research and development in machine learning, neural networks, and other technologies.”

GigaChat: “AGI (Artificial General Intelligence) is general artificial intelligence. This is the concept of creating an intelligent machine that can solve any intellectual tasks in the same way as a human does, and perhaps better than him.”

ChatGPT4: “AGI stands for Artificial General Intelligence (Automated General Intelligence), which is a type of artificial intelligence that can perform a wide range of intelligent tasks at the human level or even surpass it. This is in contrast to highly specialized AI, which is designed for specific tasks. Building an AGI is one of the main goals of AI research and companies like OpenAI. The time to reach AGI is the subject of discussions among researchers and experts.”

However, the original query also has a significant impact on the result.

I will also give you some examples of question-and-answer pairs for the YandexGPT 3 Pro LLM.

Question 1: “Hi. What is strong AI or AGI?”

Answer 1: “Hi! Strong AI, also known as AGI (Artificial General Intelligence), is the concept of creating artificial intelligence that can perform any intellectual tasks available to humans, but not necessarily in the same way as a human does. Unlike weak AI, which is designed to perform specific tasks, strong AI tends to mimic human intelligence in general, including analytical thinking and learning abilities.”

Question 2: “Expand the abbreviation AGI”.

Answer 2: “AGI (Artificial General Intelligence) is the concept of creating artificial intelligence that will be able to solve a wide range of tasks at the level of human intelligence.”

Question 3: “What is the explanation of the term AGI and its description?”

Answer 3: “AGI (Artificial General Intelligence) is a strong general-level artificial intelligence capable of thinking and acting like a human. They can make independent conclusions, learn, and possibly become self-aware at some point in time. Unlike Narrow AI, AGI is the entire brain capable of solving a set of tasks.”

As you can see, a lot depends on the request. And this is one of the key limitations of generative AI (GenAI), but we’ll talk about it in the next chapter.

Now I will give the definition that, in my opinion, most accurately defines its essence.

Strong or general AI (AGI) is an AI that can navigate changing conditions and model and predict how a situation will develop. And if the situation goes beyond its standard algorithms, it can find a solution on its own. For example, it can solve a task such as “get into university”, or learn the rules of checkers and start playing checkers instead of chess.

What qualities should such an AI have?

Thinking is the use of methods such as deduction, induction, association, etc., which are aimed at extracting facts from information and at representing and storing them. This will make it possible to solve problems more accurately in conditions of uncertainty.

Memory is the use of various types of memory (short-term, long-term). That is, the tasks should be solved taking into account the accumulated experience. Now, if you chat with ChatGPT 4, you will see that the algorithm has a small short-term memory and after a while forgets where it all started. In general, in my opinion, the issue of memory and the “massiveness” of AI models will be a key limitation in the development of AI. More on this below.

Planning — tactical and strategic. Yes, there are already studies that claim that AI can plan its actions and even deceive a person to achieve its goals. But this is still only in its infancy. The deeper the planning goes, especially in conditions of uncertainty, the more capacity is needed. After all, it’s one thing to plan a game of chess 3 to 6 moves deep, where all the rules are clear, and quite another to plan in a situation of uncertainty.

Training — simulating the actions of another object and learning through experimentation. Today AI learns from large amounts of data, but it does not build models or run experiments itself. Although we do not fully understand how ChatGPT works, and this is one of the main problems, learning requires the formation of long-term memory and complex relationships. And this, as we understand it, is a problem for AI.
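The short-term memory limitation mentioned above can be illustrated with a small sketch. It rests on a simplifying assumption of mine: the chat keeps only as many recent messages as fit a fixed budget and silently drops the oldest ones. Real systems count tokens rather than words and may use other strategies; the `ChatMemory` class and its budget are hypothetical.

```python
from collections import deque

class ChatMemory:
    """Keeps only the most recent messages that fit a fixed word budget."""

    def __init__(self, max_words):
        self.max_words = max_words
        self.messages = deque()

    def _word_count(self):
        return sum(len(message.split()) for message in self.messages)

    def add(self, message):
        self.messages.append(message)
        # Drop the oldest messages until the conversation fits the budget.
        while self._word_count() > self.max_words and len(self.messages) > 1:
            self.messages.popleft()

    def visible_context(self):
        """Return what the model can still 'see' when answering."""
        return list(self.messages)

memory = ChatMemory(max_words=12)
memory.add("My name is Anna and I manage a factory")
memory.add("We produce steel pipes")
memory.add("What is my name?")

context = memory.visible_context()
print(context)
```

By the time the question arrives, the message containing the name has already been pushed out of the budget, so the model has nothing to base its answer on. This is the "forgets where it all started" effect in miniature.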

Right now, no one has such a strong AI. And the claim that strong AI will appear soon (on the 2024 to 2028 horizon) is, in my opinion, erroneous or speculative. Although maybe my knowledge is too limited…

Yes, ChatGPT from OpenAI and other LLMs can generate text / illustrations / videos through query analysis and big data processing. But they only reproduce what their creators gave them for training. They search for the most appropriate combinations of words and sentences, words and images for the query, and in general, associative combinations. But don’t be under any illusions: this is just mathematics and statistics. And their answers contain a lot of “defects” and “hallucinations”. They are not yet ready for real interaction with the world.
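The idea of "searching for the most appropriate combinations of words" can be shown with a deliberately tiny stand-in. The sketch below counts which word most often follows which in a training text and generates a phrase from those counts. It is an assumption-laden simplification: real LLMs use neural networks over tokens rather than word-pair counts, and the corpus and function names here are invented for illustration.

```python
from collections import Counter, defaultdict

def train_bigram_model(text):
    """Count, for every word, which words follow it and how often."""
    followers = defaultdict(Counter)
    words = text.lower().split()
    for current_word, next_word in zip(words, words[1:]):
        followers[current_word][next_word] += 1
    return followers

def generate(followers, start, length):
    """Extend `start` by always picking the most frequent next word."""
    result = [start]
    for _ in range(length):
        options = followers.get(result[-1])
        if not options:
            break  # the model has never seen anything after this word
        result.append(options.most_common(1)[0][0])
    return " ".join(result)

# Hypothetical "training data" for the toy model.
corpus = (
    "the team plans the sprint "
    "the team reviews the sprint "
    "the team plans the release"
)

model = train_bigram_model(corpus)
sentence = generate(model, "the", 3)
print(sentence)
```

The model produces "the team plans the" purely because those word pairs were the most frequent in its training text. It has no idea what a team or a plan is, which is the point made above: statistics, not understanding.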

Here is a simple LLM-based example.

I come from a project management background, and as part of my work I decided to write an article about why Agile is not a universal approach to project management, and why, when Agile is applied correctly, any company and team should move closer to hybrid or cascading approaches.

My assistants in writing the text were the same ChatGPT, GigaChat, YandexGPT, and AI Search.

I formulated a simple request: “Write a post about why it is wrong to apply Agile endlessly. Emphasize that conducting retrospectives should lead away from a situation of uncertainty toward complex ordered or simple ordered systems.”

ChatGPT4’s answer: “Agile is an approach to software development that is based on iterative and incremental product development, collaboration between self-organizing teams, and continuous process improvement. One of the key principles of Agile is to conduct regular retrospectives, i.e., sessions where the team analyzes its work, identifies problems and finds ways to solve them.

Retrospectives are a powerful tool for improving the efficiency and quality of work, but only if they are conducted correctly. Unfortunately, many teams make the mistake of applying Agile indefinitely, without taking into account the context and changes in the environment. This leads to the fact that retrospectives become useless, boring and demotivating.

To avoid this, you need to understand that Agile is not a universal solution for every situation. Depending on the degree of uncertainty and complexity of the project, the team may be in one of four zones: chaos, complexity, order, or simplicity. These zones can be represented as a matrix, where the degree of uncertainty is plotted horizontally, and the degree of complexity is plotted vertically.

!Matrix of zones (the chatbot’s own formatting; this is exactly how it marked this spot)

Each zone requires a different approach to retrospectives and improvements. In the chaos zone, the team faces unpredictable and extreme situations that require rapid response and adaptation. In this case, retrospectives should be short, frequent, and focused on survival and stabilization. In the complexity zone, the team works with unclear and dynamic requirements that require creativity and experimentation. In this case, retrospectives should be long, sparse, and focused on learning and innovation. In the order zone, the team works with clear and stable requirements that require discipline and optimization. In this case, retrospectives should be average, regular, and focused on efficiency and quality. In the simplicity zone, the team works with obvious and simple requirements that require minimal effort and attention. In this case, retrospectives can be omitted or conducted as necessary.

However, it is important to understand that zones are not static and can change over time. Therefore, the team must constantly evaluate its context and adapt its approach to retrospectives. The purpose of retrospectives is not to apply Agile indefinitely, but to lead the team from a situation of uncertainty to a situation of complex ordered or simple ordered systems. Only then will retrospectives bring benefits and pleasure to the team and the project.”

GigaChat’s answer: “Agile is a software development methodology that has become very popular in recent years. It offers a flexible approach to project management that allows you to quickly respond to changes and adapt to new requirements. However, the endless application of Agile can lead to problems.

One of the main reasons why it is wrong to apply Agile endlessly is that it can lead to a situation of uncertainty. In Agile projects, we often encounter changes in requirements and priorities, which can cause uncertainty about what needs to be done next.

Conducting retrospectives is one way to deal with this uncertainty. Retrospectives help the team understand what was done right and what needs to be improved in the future. They also help the team move from a situation of uncertainty to a situation of complex ordered or simple ordered systems.

However, if we endlessly apply Agile without doing retrospectives, then we may be left in a situation of uncertainty. This can lead to the team spending a lot of time fixing bugs and adapting to new requirements, rather than creating new functionality.

In addition, the endless use of Agile can lead to the fact that the team will constantly work on small tasks and will not have time to create large functional blocks. This may cause the project to develop slowly and not meet the customer’s expectations.

In general, Agile is a great approach to project management, but its endless application can lead to problems. Conducting retrospectives helps the team move from a situation of uncertainty to a situation of complex ordered or simple ordered systems and improve the quality of the team’s work.”

YandexGPT 2’s answer: “The endless use of Agile can lead to incorrect decisions and product quality problems. It is important to understand that Agile is not a universal solution for all projects and teams, and its constant use can lead to loss of efficiency and productivity.

One of the key principles of Agile is to conduct retrospective sessions that help the team analyze their past successes and failures. However, if these retrospectives are held constantly, it can lead the team away from solving real problems and tasks.

Instead of helping the team develop and become more effective, constant retrospectives can lead to a situation where the team is constantly discussing the same problems and cannot move forward. This can create a situation of uncertainty when the team does not know what to do next, or vice versa, it can lead to the creation of complex and disordered systems that only complicate the work process.

To avoid this, it is important to determine when and how often to conduct retrospective sessions. They should focus on analyzing specific issues and opportunities for improvement, rather than discussing the same issues over and over again.

It’s also important to understand that each team and project is unique, and what works for one team may not work for another.”

YandexGPT 3 Pro: “Why might Agile be the wrong choice?

Agile is a project management methodology that involves an iterative approach to software development. It includes regular team meetings, constant interaction with the customer, and readiness for changes.

However, using Agile is not always the right solution. In some cases, the use of this methodology can lead to negative consequences.

Here are a few reasons why Agile might be the wrong choice:

Not suitable for large projects. Agile is best suited for small projects where you can quickly respond to changes. In large projects that require more careful planning and coordination, Agile can lead to chaos and loss of control.

Not suitable for projects with clear requirements. If the project has clear requirements that are not subject to change, Agile can lead to unnecessary spending of time and resources.

Not suitable for projects with a long development cycle. Agile involves short development cycles that allow you to respond quickly to changes. In projects with a long development cycle, this may cause the project to constantly change and make it impossible to complete.

It is important to understand that Agile is not a universal methodology that is suitable for all projects. The choice of methodology should be based on the specifics of the project, its goals and objectives.

How to avoid negative consequences of using Agile?

To avoid negative consequences of using Agile, it is necessary to carefully analyze the project and determine whether this methodology is suitable for its implementation. If you decide to use Agile, you must follow the following rules:

Conduct regular retrospectives. Retrospectives allow the team to analyze their work and identify weaknesses. This helps you avoid repeating mistakes and improve your work efficiency.

Set clear goals and deadlines. This will help the team focus on completing tasks and avoid wasting time.

Maintain communication with the customer. This will allow you to receive timely feedback and make necessary changes to the project.

Conducting retrospectives should lead away from the situation of uncertainty to the situation of complex ordered or simple ordered systems. This will allow the team to analyze their work, identify weaknesses and take measures to eliminate them.

In conclusion, Agile is an effective methodology that can bring many benefits if applied correctly. However, its use requires careful planning and analysis.”

AI Search’s response: “Hello, friends! Today I would like to share my thoughts on why Agile, despite its popularity and effectiveness, is often used incorrectly. I will pay particular attention to why conducting retrospectives is an important tool for moving from a situation of uncertainty to orderly or complex systems.

Agile is a philosophy and approach to software development that changes the traditional approach to project management, making it more flexible and adaptive to change. However, despite its obvious advantages, Agile can be applied incorrectly, and as a result, its potential is not fully revealed.

One of the main problems of using Agile incorrectly is the lack of regular retrospectives. A retrospective, as an expert assessment of past work, helps teams take into account the changes, shortcomings, and improvements that have occurred when planning future iterations. This allows you to hone the work process and increase efficiency. However, some teams often ignore conducting retrospectives or conduct them unsystematically.

By not conducting regular retrospectives, teams miss opportunities to see their issues, validate or change their vision, and customize development milestones. As a result, teams may be stuck in a situation of uncertainty and unable to move forward.

One of the main principles of Agile is to ensure a fast response to changes and continuous improvement of the work process. If retrospectives are not conducted regularly, teams are not sufficiently aware of their mistakes and omissions, which makes it difficult to progress towards achieving order and efficiency.

In addition, conducting regular retrospectives helps teams move from a situation of uncertainty to complex or simple ordered systems. Starting with simple, streamlined systems where rules and processes are easily defined and controlled, teams can gradually move to more complex systems where rules are more ambiguous and require more adaptation.

So, retrospectives are an important part of the Agile process and help teams move from uncertainty to order and complex systems. They allow teams to recognize their mistakes and shortcomings, as well as see areas for improvement. Do not neglect regular retrospectives so that your team can maximize the potential of Agile and achieve high performance.

I wish you successful work in applying Agile and continuous improvement of your processes! Be flexible, persistent, and considerate.”

And finally, my post: “Why is Agile not eternal and not a panacea?”

“In recent years, everyone declares that they are Agile.

Let’s be honest: most people hide banal chaos under this. After all, Agile is not about the lack of planning. In addition, it is very sensitive to compliance with certain rules and to the stability and motivation of the team. If you don’t meet these conditions, don’t use Agile in the future.

And using Scrum, Kanban, or other such approaches should eventually remove the need to run projects in Agile at all.

But why?

Let’s remember that Agile was originally designed to work and implement projects in a highly uncertain environment.

There is even a special tool, the Cynefin framework, that helps you understand what situation you are in, which approach to choose, and what to focus on. In ordered systems (simple or complex situations), Agile is actually contraindicated, because it raises the cost of achieving results. That is, Agile is effective when you need to “do I don’t know what”. But it is not about efficiency.

Now let’s take a look at the retrospective. All approaches within Agile involve regular retrospectives, analysis of the team’s work, and interaction with the client, customer, or partner. In other words, the very logic of these tools is to get out of an uncertain situation, learn to predict such situations, and become more effective.

If you constantly (every six months or a year) change jobs or constantly launch new products, and do not replicate proven solutions (which is strange for a business), then yes, you need to be Agile.

But if you have a defined target-audience segment, and you have built up experience and expertise in typical approaches and products that only need minor adjustment and adaptation, then sooner or later you must move away from Agile and arrive at an ordered system where waterfall or hybrid approaches are needed. Retrospectives should lead you to understand what customers want in 90% of cases and how the organization works.

As a result, if you are Agile on an ongoing basis and everywhere, and not for the period of rebuilding / launching / adapting, then this may indicate that:

— you are not following the Agile tools;

— you haven’t found your product or niche, and you haven’t developed the necessary expertise;

— you always have a unique product / project (which should be reflected in the high price of your services);

— the organization is “sick” from the inside, and this way you mask high turnover, lack of work on processes, etc.

What do you think about it?”

The neural network’s answers read like those of a weak student who just strings together similar-sounding words without understanding what he is talking about. Could I get a similar post from the neural network, one that conveys my meaning? Yes, of course. But I wrote my post in 25 minutes; how long would it take me to coax the same result out of the AI? And does this look like a truly thinking intelligence? Or like intelligence at all?

The fact is that our thinking is strongly tied to ideas and images. We mostly reason from the general to the particular: we think in images, and from abstractions that are vague but understandable to us at an unconscious level we work our way down to the smallest details. In parallel, we draw a host of associations, again tied entirely to images. It is no accident that human speech is so full of metaphors, comparisons, allusions, and wordplay.

Modern neural networks “think” quite differently. They simply do not know the concepts of “meaning”, “essence”, “idea”, as well as the process of reflection itself. The texts generated by the neural network look logical not because the neural network really understands what it is writing about, but because it is able to calculate which words are most often next to each other in the texts available for its training on a given topic. Pure mathematics based on calculating probabilities.
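To make the idea of "calculating which words are most often next to each other" more tangible, here is a deliberately tiny sketch. It is not how production LLMs work internally (they use neural networks over tokens, not raw word counts); it is a simple bigram counter on a made-up four-sentence corpus, and the function names are illustrative assumptions.

```python
from collections import Counter, defaultdict

def train_bigram_model(corpus):
    """Count, for every word, which words follow it in the training text."""
    following = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current_word, next_word in zip(words, words[1:]):
            following[current_word][next_word] += 1
    return following

def next_word_probabilities(model, word):
    """Turn raw follow-up counts into probabilities for the next word."""
    counts = model[word.lower()]
    total = sum(counts.values())
    return {candidate: count / total for candidate, count in counts.items()}

# Tiny made-up training corpus (illustrative only).
corpus = [
    "agile teams hold regular retrospectives",
    "agile teams adapt to change",
    "agile teams hold daily meetings",
    "agile is not a panacea",
]

model = train_bigram_model(corpus)
probabilities = next_word_probabilities(model, "teams")
print(probabilities)
```

After the word "teams", this toy model considers "hold" twice as likely as "adapt", simply because that is what the counts say. There is no notion of meaning anywhere in the calculation, which is the point the paragraph above makes.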

However, in fairness, we should note significant progress in this direction. Developers are now trying to teach AI to reason: the model asks itself questions and, by answering them, draws conclusions. This imitation of an internal dialogue improves the quality of the output and is typical of OpenAI’s models from late 2024 and of YandexGPT 4 Pro RC.

Here is an example of the response of the YandexGPT 4 Pro RC model to the request: “Write a post about why applying Agile indefinitely is incorrect. Emphasize that conducting retrospectives should lead away from the situation of uncertainty to the situation of complex ordered or simple ordered systems.”

YandexGPT 4 Pro RC response: “Why Agile doesn’t always work: how retrospectives can help move from uncertainty to order.

Agile is a flexible approach to project management that allows you to quickly adapt to changes and improve processes based on feedback. However, despite all the advantages, applying Agile without considering the context can lead to problems. In this post, we will look at why Agile should not be applied indefinitely and how retrospectives help to move from a situation of uncertainty to ordered systems.

Problems of infinite Agile Application

1. Lack of clear structure. Agile is based on the principles of flexibility and adaptability, which can lead to a lack of a clear structure and action plan. This can make it difficult to make decisions and manage risks.

2. Complexity of evaluating results. Without a clear plan and criteria for evaluating results, it is difficult to determine how well the project is progressing. This may raise doubts about the effectiveness of the approach.

3. Risk of losing control. The endless use of Agile can lead to a loss of control over the project. Without clear boundaries and constraints, the team may lose focus and start to deviate from the original goal.

4. Inefficient use of resources. If the team is constantly adapting to new conditions, this can lead to inefficient use of resources. Instead of focusing on key tasks, the team will spend time making constant changes.

5. Reduced team motivation. Constant adaptation and changes can cause stress and fatigue for the team. This can reduce motivation and productivity.

How retrospectives help you move to streamlined systems

Retrospectives are one of the key Agile tools that help the team analyze their work and identify areas for improvement. They allow us to move from a situation of uncertainty to more ordered systems, where processes and decisions become more predictable and manageable.

Here are some ways that retrospectives can help:

— Identifying problems and their solutions. Retrospectives allow you to identify problems that have arisen in the course of work, and find ways to solve them. This helps you avoid making mistakes again in the future.

— Improve processes. Retrospectives help you understand which processes are working well and which need to be improved. This allows you to optimize your work processes and increase efficiency.

— Increase motivation. When a team sees the results of their efforts and understands that they bring benefits, it increases motivation and job satisfaction.

— Create a culture of continuous improvement. Retrospectives foster a culture in which continuous improvement and adaptation are the norm. This helps the team to be more flexible and ready for changes.

In general, retrospectives are an important tool for moving from a situation of uncertainty to more structured and orderly processes. They help the team adapt to changes, improve their processes, and achieve their goals.”

Yes, the model’s style has become academic, and it is clear that these are excerpts from articles rather than reflections. But this is huge progress: you can already work with the model and use it as a tool.

However, while only weak AI is available and we merely dream of a more or less strong one, researchers already single out super-strong AI (ASI, Artificial Superintelligence) in their classifications.

This is an AI that:

— can solve both routine and creative tasks;

— instantly navigates uncertainty even without a network or internet connection;

— adapts problem solving to the context of circumstances and available opportunities / resources;

— understands people’s emotions (not only through a text query, but also based on the analysis of facial expressions, voice timbre, and other parameters) and takes them into account in its work;

— is able to interact independently with the real world to solve problems.

This is the kind of AI that we only see in science fiction movies so far. Even AI itself writes about ASI as a “hypothetical concept” and “a subject of science fiction and active research in the field of artificial intelligence” (YandexGPT 3 Pro). This is a certain desired point in the distant future, which is not yet possible to reach.

ChatGPT 4 describes ASI as follows: “a hypothetical artificial intelligence system with intelligence superior to human. This is not just an advanced AI, but a system that can adapt, learn and develop independently, exceeding human abilities in learning, problem solving, cognitive functions and self-improvement.

Super-strong AI, or ASI, will be able to understand and process many types of data (text, images, sound, video), which will allow it to perform verbal tasks and make decisions. It will use advanced AI technologies such as large language models (LLMs), multi-bit neural networks, and evolutionary algorithms.

Currently, ASI remains a conceptual and speculative stage in the development of AI, but it represents a significant step forward from the current level of AI.”

And while there are now hundreds of weak AIs, one for each task, there will be only dozens of strong AIs (most likely divided by field, which we will consider in the next section), and a super-strong AI will be one per state, or even one for the entire planet.

Limitations on the path to strong AI

To be honest, I have little faith in the rapid emergence of a strong or super-strong AI.

First, this is a very costly and complex task from the point of view of regulatory restrictions. The era of uncontrolled AI development is ending. More and more restrictions will be imposed on it. We’ll discuss AI regulation in a separate chapter.

The key trend is a risk-based approach. Under such an approach, strong and super-strong AI will sit at the highest level of risk, which means that the legislative measures applied to them will be prohibitive.

Secondly, this is a difficult task from a technical point of view, and a strong AI will be very vulnerable.

Now, in the mid-2020s, creating and training a strong AI requires enormous computing power. According to Leopold Aschenbrenner, a former OpenAI employee from the Superalignment team, it will require a data center worth a trillion US dollars, and its power consumption will exceed all current electricity generation in the United States.

We also need complex AI models (orders of magnitude more complex than the current ones) and combinations of them (not just an LLM for query analysis). In other words, we need to increase the number of neurons exponentially, build connections between them, and coordinate the work of the various segments.

At the same time, it should be understood that while human neurons can be in several states, and activation can occur “in different ways” (biologists will forgive me for such simplifications), machine AI is a simplified model that cannot do this. Simply put, a machine’s 80 to 100 billion neurons are not equal to a human’s 80 to 100 billion. The machine will need more neurons to perform similar tasks. GPT-4, for example, is unofficially estimated at more than a trillion parameters (loosely comparable to neurons), and it is still inferior to humans.

All this leads to several factors.

The first factor is that increasing complexity always leads to reliability problems, and the number of failure points increases.

Complex AI models are difficult both to create and to protect from degradation over time, in the course of operation. AI models need to be constantly “serviced”. If this is not done, a strong AI will begin to degrade and its neural connections will break down; this is a normal process. Any complex neural network that is not constantly developing begins to shed unnecessary connections. At the same time, maintaining connections between neurons is very expensive. AI will always optimize and look for the most efficient solution to a problem, which means that it will start switching off unnecessary energy consumers.

That is, the AI will come to resemble an old man with dementia, and its “lifespan” will be greatly reduced. Imagine what a strong AI, with all its capabilities, could do while suffering from memory loss and sudden regressions to a childlike state. Even for current AI solutions, this is a pressing problem.

Let’s give a couple of simple real-life examples.

You can compare building a strong AI to training human muscles. When we first start working out in the gym and take up strength training or bodybuilding, progress is fast, but the further we go, the smaller the gains. You need more and more resources (time, exercise, and energy from food) to progress. Even just maintaining your form becomes more and more difficult. In addition, strength grows with the cross-sectional area of the muscle, while mass grows with its volume. As a result, at some point the muscle would become so heavy that it could no longer move itself, and might even damage itself.

Another example of the difficulty of creation, this time from engineering, is Formula 1 racing. A 1-second gap can be closed by investing 1 million and 1 year. But winning back the crucial last 0.2 seconds may take 10 million and 2 years of work. And the fundamental limitations of the car’s design can force you to rethink the whole concept of the racing car.

And if you look at ordinary cars, everything is exactly the same. Modern cars are more expensive to build and maintain, and without special equipment it is impossible to change even a light bulb. As for modern hypercars, entire teams of technicians are required for maintenance after every drive.

If you look at it from the point of view of AI development, there are two key parameters in this area:

— the number of layers of neurons (depth of the AI model);

— the number of neurons in each layer (layer width).

Depth determines how strong the AI’s ability to abstract is. Insufficient depth makes the model incapable of deep systemic analysis, so its analysis and judgments stay superficial.

The width of the layers determines the number of parameters or criteria that the neural network can use at each layer. The more there are, the more complex the models that can be used and the more complete the reflection of the real world.

However, while the number of layers has a linear effect on the function, the width does not. As a result, we get the same analogy with muscle: the largest AI models (LLMs) exceed a trillion parameters, yet models two orders of magnitude smaller show no critical drop in performance or response quality. What matters more is what data the model is trained on and whether it is specialized.
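One way to read the linear versus non-linear remark is through model size. The sketch below assumes the simplest possible architecture, a stack of fully connected layers of equal width; this is an assumption for illustration, since real LLMs are built from transformer blocks. Under that assumption, doubling the depth doubles the parameter count, while doubling the width roughly quadruples it.

```python
def dense_parameter_count(depth, width):
    """Weights plus biases in `depth` fully connected layers of `width` neurons."""
    weights_per_layer = width * width  # every neuron connects to every input
    biases_per_layer = width
    return depth * (weights_per_layer + biases_per_layer)

base = dense_parameter_count(depth=10, width=1000)
deeper = dense_parameter_count(depth=20, width=1000)  # depth doubled
wider = dense_parameter_count(depth=10, width=2000)   # width doubled

print(f"base:   {base:,} parameters")
print(f"deeper: {deeper / base:.2f}x the base size")
print(f"wider:  {wider / base:.2f}x the base size")
```

So widening a model is the expensive direction: the size, and with it the cost of training and operation, grows much faster than the width itself.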

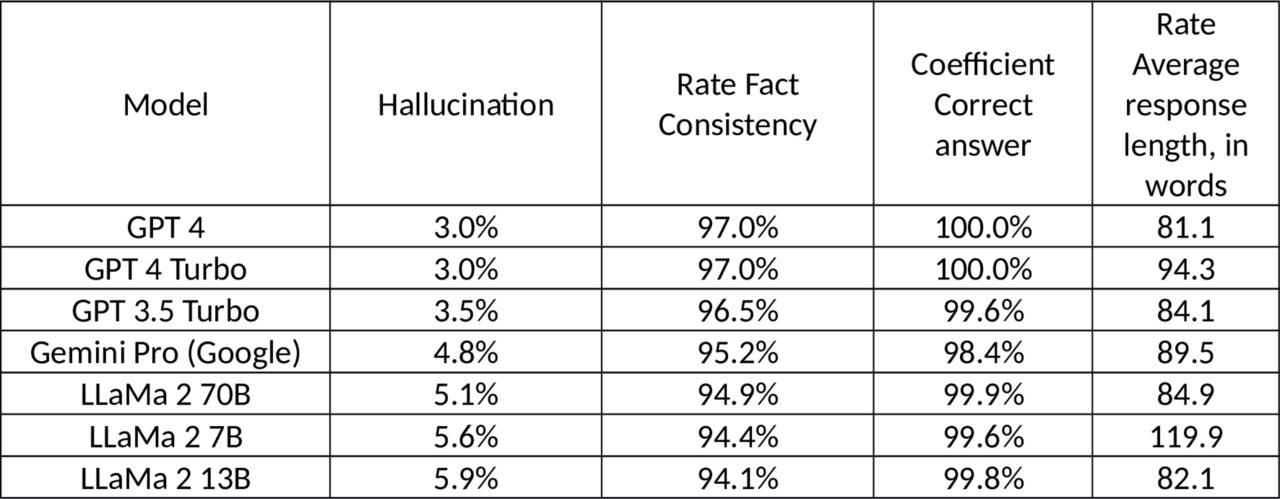

Below are statistics for LLM models from different manufacturers.

Compare the figures for LLaMa 2 70B, LLaMa 2 7B, and LLaMa 2 13B. The labels 70B, 7B, and 13B give the number of parameters in billions, a rough indication of the model’s complexity and training effort; in principle, the higher the value, the better. But as we can see, the quality of responses does not change radically, while the price and development effort increase significantly.

And we can see the leaders building up computing power, constructing new data centers, and hurriedly solving the energy supply and cooling problems of these monsters. At the same time, improving model quality by a notional 2% requires an order-of-magnitude increase in computing power.
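The "2% per order of magnitude" rule of thumb can be turned into a small calculation. The sketch below simply takes that figure at face value and assumes it holds at every scale (a logarithmic relationship); the function names and the default of 2 points per tenfold increase are illustrative assumptions, not measured values.

```python
import math

def required_compute_multiplier(quality_gain_points, points_per_tenfold=2.0):
    """How many times more compute is needed for a given quality gain,
    assuming each tenfold increase in compute adds `points_per_tenfold` points."""
    return 10 ** (quality_gain_points / points_per_tenfold)

def quality_gain(compute_multiplier, points_per_tenfold=2.0):
    """Inverse relation: quality points gained from multiplying compute."""
    return points_per_tenfold * math.log10(compute_multiplier)

for gain in (2, 4, 6):
    multiplier = required_compute_multiplier(gain)
    print(f"+{gain} points of quality -> {multiplier:,.0f}x compute")
```

Under this assumption, six points of quality cost a thousand times more compute than today. This is the same diminishing-returns picture as in the Formula 1 example.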

Now a practical example on the question of upkeep and maintenance in the face of degradation. Here the influence of people will also be noticeable. Any AI, especially at an early stage, learns from people’s feedback (their satisfaction, their initial requests and tasks). For example, ChatGPT 4 uses user requests to retrain its model so as to give more relevant answers while reducing the load on the “brain”. And at the end of 2023, articles appeared saying that the model had become “lazier”: the chatbot either refuses to answer questions, interrupts the conversation, or simply responds with excerpts from search engines and other sites. By mid-2024 this had become the norm, with the model simply citing excerpts from Wikipedia.

One possible reason is that user requests themselves are becoming simpler and more primitive. After all, LLMs do not invent anything new; these models try to understand what you want them to say and adapt to it (in other words, they too form stereotypes). The model also seeks the best effort-to-result ratio, “disabling” unnecessary neural connections. This is called function maximization. Just math and statistics.

Moreover, this problem will be typical not only for LLM.

As a result, to keep the AI from degrading, you will have to load it with complex research while limiting its exposure to primitive tasks. But once it is released into the open world, the balance of tasks will tip toward simple, primitive user requests and applied problems.

Think about yourself. Do you really need to evolve to survive and reproduce? What is the ratio of intellectual to routine tasks in your work? What level of math problems do you solve in your job? Do you need integrals and probability theory, or just math up to 9th grade?

The second factor is the amount of data and hallucinations.

Yes, we can scale up the current models by XXXX times. But the ChatGPT 5 prototype already lacked training data in 2024: it had been given everything there was. And for an AI that has to navigate uncertainty, there simply will not be enough data at the current level of technology. You would need to collect metadata about user behavior, work out how to get around copyright and ethical restrictions, and obtain user consent.

In addition, the current LLMs show another trend. The more “omniscient” a model is, the more inaccuracies, errors, abstractions, and hallucinations it produces. At the same time, if you take a base model and give it a specific subject area as knowledge, the quality of its responses increases: they are more objective, and it fantasizes (hallucinates) less and makes fewer mistakes.

The third factor is vulnerability and costs.

As we discussed above, we will need to build a data center worth a trillion US dollars, and its power consumption will exceed all current electricity generation in the United States. This means that an energy infrastructure with a whole complex of nuclear power plants will also have to be built. And no, wind turbines and solar panels cannot solve this problem.

Now let’s add that the AI model will be tied to its “base”, and then one successful cyber-attack on the energy infrastructure will de-energize the entire “brain”.

And why should such an AI be tied to the center, why can’t it be distributed?

First, distributed computing still loses performance and efficiency. These are heterogeneous computing capacities that are also loaded with other tasks and processes. In addition, a distributed network cannot guarantee the operation of computing power all the time. Something turns on, something turns off. The available power will be unstable.

Secondly, it is vulnerable to attacks on communication channels and on the distributed infrastructure itself. Imagine that 10% of the neurons in your brain suddenly switched off (because communication channels were blocked or nodes were taken down by an attack), and the rest are working at half strength (interference and so on). As a result, we again face the risk of a strong AI that forgets who it is, where it is, and what it is for, or simply takes a long time to think.

And if it comes to the point where a strong AI needs a mobile body to interact with the world, this will be even harder to implement. How do you power all this and cool it? Where does the data processing power come from? On top of that, you need machine vision and image recognition, as well as processing for other sensors (temperature, hearing, and so on). All of this means enormous computing power, cooling, and energy.

That is, it will be a limited AI with a permanent wireless connection to the main center. And this is again a vulnerability. Modern communication channels offer higher speeds, but at the cost of reduced range and penetration and greater vulnerability to electronic warfare. In other words, we get a heavier load on the communication infrastructure and higher risks.

Here, of course, you can object. For example, you could take a pre-trained model and make it local, in much the same way as I suggest deploying local AI models with “additional training” in the subject area. Yes, in this form, everything can run on a single server. But such an AI will be very limited, it will be “stupid” in conditions of uncertainty, and it will still need energy and a network connection. That is, this story is not about creating human-like super-beings.

All this leads to questions about the economic feasibility of investing in this area. Especially considering two key trends in the development of generative AI:

— creating cheap and simple local models for solving specialized tasks;

— creating AI orchestrators that decompose a request into several local tasks and then distribute them among different local models.

Thus, weak models with narrow specialization will remain more accessible and easier to create, while still being able to solve our tasks. As a result, we get a simpler and cheaper way of solving work tasks than creating a strong AI.
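To show what an orchestrator over narrow models might look like in its most basic form, here is a minimal sketch. Everything in it is hypothetical: the model names, the keyword-based routing, and the sentence-by-sentence decomposition are stand-ins chosen for brevity, and the handlers are plain functions rather than calls to real models.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass
class LocalModel:
    """A hypothetical narrow model: a name, trigger keywords, and a handler."""
    name: str
    keywords: tuple
    handle: Callable[[str], str]

def decompose(request):
    """Naive decomposition: treat each sentence as a separate subtask."""
    return [part.strip() for part in request.split(".") if part.strip()]

def route(subtask, models, fallback):
    """Pick the first model whose keywords appear in the subtask text."""
    lowered = subtask.lower()
    for model in models:
        if any(keyword in lowered for keyword in model.keywords):
            return model
    return fallback

def orchestrate(request, models, fallback):
    """Split the request, send each part to a model, collect the answers."""
    results = []
    for subtask in decompose(request):
        model = route(subtask, models, fallback)
        results.append((model.name, model.handle(subtask)))
    return results

# Stand-in handlers; a real system would call actual local models here.
models = [
    LocalModel("forecast-model", ("forecast", "predict"), lambda t: f"forecast for: {t}"),
    LocalModel("text-model", ("write", "summarize"), lambda t: f"draft for: {t}"),
]
fallback = LocalModel("general-model", (), lambda t: f"general answer for: {t}")

answers = orchestrate(
    "Forecast project delays for Q3. Write a status letter to the client. Check the budget",
    models,
    fallback,
)
for model_name, answer in answers:
    print(model_name, "->", answer)
```

In practice the decomposition and routing steps would themselves probably be handled by a language model rather than by keywords, but the overall shape (split, route, collect) stays the same.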

Of course, we are leaving neuromorphic and quantum systems aside here; we will discuss this topic a little later. And, of course, some of my individual figures and arguments may contain errors, but on the whole I am convinced that strong AI is not a matter of the near future.

In summary, strong AI has several fundamental problems.

— Exponential growth in the complexity of developing complex models and countering their degradation.

— Lack of data for training.

— Cost of creation and operation.

— Attachment to data centers and demanding computing resources.

— Low efficiency of current models compared to the human brain.

It is overcoming these problems that will determine the further vector of development of the entire technology: either a strong AI will still appear, or we will move into the plane of development of weak AI and AI orchestrators, which will coordinate the work of dozens of weak models.

But for now strong AI does not fit with ESG, with environmental goals, or with commercial viability. Its creation is possible only within strategic, national projects financed by the state. Here is one interesting fact in this regard: retired General Paul Nakasone, former head of the US National Security Agency (until early 2024), joined the board of directors of OpenAI in 2024. The official reason is to help organize ChatGPT security.

I also recommend reading the document titled “Situational Awareness: The Decade Ahead”. Its author is Leopold Aschenbrenner, a former OpenAI employee from the Superalignment team. The document is available via the QR code and hyperlink.

A condensed analysis of this document is also available via the QR code and hyperlink below.

Put as simply as possible, the author’s key theses are:

— By 2027, strong AI (AGI) will become a reality.

I disagree with this statement. My arguments are given above, and some of the theses below and the author’s own descriptions of the risks point the same way. But then again, what is meant by the term AGI? I have already given my own definition, but there is no single agreed one.

— AGI is now a key geopolitical resource. Forget nuclear weapons; they are the past. Every country will strive to get AGI first, as once with the atomic bomb.

The thesis is debatable. Yes, this is a great resource. But it seems to me that its value is overestimated, especially given the complexity of its creation and the inevitable errors in its operation.

— Creating an AGI will require a single computing cluster worth a trillion US dollars. Microsoft is already building one for OpenAI.

Beyond computing power, this also requires spending on people and solving fundamental problems.

— This cluster will consume more electricity than the entire US generation.

We discussed this thesis above. On top of the trillion dollars for the cluster come investments in electricity generation, and with them additional risks.

— AGI funding will come from the tech giants: Nvidia, Microsoft, Amazon, and Google are already allocating $100 billion a quarter to AI alone.

I believe that government funding and, consequently, intervention is essential.

— By 2030, annual investment in AI will reach $8 trillion.

Excellent observation. Now the question arises, is this economically justified?

Despite all the optimism of Leopold Aschenbrenner regarding the timing of the creation of AGI, he himself notes a number of limitations:

— Lack of computing power for conducting experiments.

— Fundamental limitations associated with algorithmic progress.

— Ideas are becoming more complex, so it is likely that AI researchers (AI agents that will conduct research for people) will only maintain the current rate of progress rather than increase it significantly. However, Aschenbrenner believes that these obstacles can slow down, but not stop, the growth of AI systems’ intelligence.

Chapter 3. What can weak AI do and general trends?

Weak AI in applied tasks

As you have probably already understood, I am a proponent of using what is available. Perhaps this comes from my experience in crisis management, or perhaps it is simply a mistaken opinion. But still, where can today’s weak AI based on machine learning be applied?

The most relevant areas for applying AI with machine learning are:

— forecasting and preparing recommendations for decisions taken;

— analysis of complex data without clear relationships, including for forecasting and decision-making;

— process optimization;

— pattern recognition, including images and voice recordings;

— automating the execution of individual tasks, including through content generation.

The newest direction, at the peak of its popularity in 2023 and 2024, is pattern recognition (including images and voice recordings) together with content generation. This is where the bulk of AI developers and most AI services are concentrated.

At the same time, the combination of AI + IoT (Internet of Things) deserves special attention:

— AI receives clean big data, free of human errors, for training and finding relationships.

— The effectiveness of IoT increases, as it becomes possible to create predictive analytics and early detection of deviations.

Key trends

— Machine learning is moving towards an increasingly low entry threshold.

One of the tasks developers are currently working on is simplifying the creation of AI models to the level of website builders, where no special knowledge or skills are needed for basic use. The creation of neural networks and data science is already developing along the “as a service” model, for example DSaaS (Data Science as a Service).

You can start getting to know machine learning with AutoML (in its free version), or with DSaaS that includes an initial audit, consulting, and data labeling. You can even get data labeling for free. All this lowers the entry threshold.

— Creating neural networks that need less and less data for training.

A few years ago, faking your voice required giving a neural network one or two hours of recordings of your speech. About two years ago, this dropped to a few minutes. And in 2023, Microsoft introduced a neural network that needs just three seconds of audio to fake a voice.

On top of that, there are tools that let you change your voice even in real time.

— Creating decision support systems, including industry-specific ones.

Industry-specific neural networks will be created, and recommendation networks, so-called “digital advisors” or solutions of the “decision support system (DSS)” class for various business tasks, will be developed more and more actively.

Practical example

We will look at this case again and again, as it is my personal pain and the product I am working on.

There is a problem in project management — 70% of projects are either problematic or fail:

— planned deadlines are exceeded in 60% of projects, and the average overrun is 80% of the original schedule;

— 57% of projects exceed their budgets, and the average overrun is 60% of the initial budget;

— the success criteria are not met in 40% of projects.
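To get a feel for what these percentages mean for a single plan, here is a rough back-of-the-envelope calculation. It assumes that the average overruns apply only to the affected projects and that all other projects finish exactly on plan; the source figures do not spell this out, and the 10-month, 1,000,000 plan is a made-up example.

```python
def expected_overrun(share_of_projects_affected, average_overrun):
    """Average overrun across the whole portfolio, assuming unaffected
    projects finish exactly on plan (an assumption, not a source figure)."""
    return share_of_projects_affected * average_overrun

schedule = expected_overrun(0.60, 0.80)  # 60% of projects, 80% late on average
budget = expected_overrun(0.57, 0.60)    # 57% of projects, 60% over budget

planned_months = 10         # hypothetical plan
planned_budget = 1_000_000  # hypothetical plan

expected_months = planned_months * (1 + schedule)
expected_budget = planned_budget * (1 + budget)

print(f"Expected schedule overrun: {schedule:.0%}, so about {expected_months:.1f} months")
print(f"Expected budget overrun: {budget:.1%}, so about {expected_budget:,.0f}")
```

In other words, under these assumptions a manager who plans 10 months and 1,000,000 should statistically expect something closer to 15 months and 1.34 million.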

At the same time, project management already takes up to 50% of managers’ time, and by 2030 this figure will reach 60%, although at the beginning of the 20th century it was 5%. The world is becoming more and more volatile, and the number of projects is growing. Even sales are becoming more and more “project-based”, that is, complex and individual.

And what do such project management statistics lead to?

— Reputational losses.

— Penalties.

— Reduced margins.

— Limiting business growth.

The most common and critical errors are:

— unclear formulation of project goals, results, and boundaries;

— insufficiently developed project implementation strategy and plan;

— inadequate organizational structure of project management;

— an imbalance in the interests of project participants;

— ineffective communication within the project and with external organizations.

How do people solve this problem? Either they do nothing and suffer, or they take training courses and use task trackers.

However, both approaches have their pros and cons. Classical training gives you the opportunity to ask questions and practice various situations in live communication with the teacher. At the same time, it is expensive and usually does not include further support after the course ends. Task trackers, on the other hand, are always at hand, but they do not adapt to a specific project or company culture and do not help develop competencies; on the contrary, they are designed to monitor work.

As a result, after analyzing my experience, I came up with the idea of a digital advisor: artificial intelligence that produces predictive recommendations on “what to do, when, and how” in 10 minutes for any project and organization. Project management becomes available to any manager for roughly a couple of thousand rubles a month.

The AI model includes a project management methodology and sets of ready-made recommendations. The AI will prepare sets of recommendations and gradually learn on its own, finding new patterns, without being tied to the opinions of its creator or of those who train the model in the first stages.

Chapter 4. Generative AI

What is generative artificial intelligence?

Earlier, we reviewed the key areas for applying AI:

— forecasting and decision-making;

— analysis of complex data without clear relationships, including for forecasting purposes;

— process optimization;

— pattern recognition, including images and voice recordings;

— content generation.

The areas of AI currently at the peak of popularity are pattern recognition (audio, video, numbers) and content generation based on it: audio, text, code, video, images, and so on. Generative AI also includes digital advisors.

Generative AI Challenges

As of mid-2024, the direction of generative AI cannot be called successful. For example, in 2022, OpenAI suffered a loss of $540 million due to the development of ChatGPT. Further development and the creation of strong AI will require about $100 billion more, a figure named by the head of OpenAI himself. The analyst firm CCS Insight gives a similarly unfavorable forecast for 2024.

For reference: it costs OpenAI $700,000 per day to keep the ChatGPT chatbot running.
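To put the daily figure in perspective, it can be converted into a yearly and monthly amount. The sketch below is only a back-of-the-envelope calculation: it takes the $700,000 per day quoted above and assumes, purely for illustration, that the cost stays flat throughout the year. The function and constant names are my own.

```python
# Figure quoted in the text: daily cost of keeping ChatGPT running, in US dollars.
DAILY_OPERATING_COST_USD = 700_000

# Assumption for illustration only: the daily cost is constant all year.
DAYS_PER_YEAR = 365
MONTHS_PER_YEAR = 12


def annualized_cost(daily_cost: int, days: int = DAYS_PER_YEAR) -> int:
    """Scale a constant daily cost up to a full year."""
    return daily_cost * days


annual = annualized_cost(DAILY_OPERATING_COST_USD)
monthly = annual / MONTHS_PER_YEAR

print(f"Per year:  ${annual:,}")
print(f"Per month: ${monthly:,.0f}")
```

Under this assumption, maintenance alone comes to roughly a quarter of a billion dollars a year, before any spending on new development.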

The general trend is confirmed by Alexey Vodyasov, Technical Director of SEQ: “AI does not achieve the marketing results that were talked about earlier. Its use is limited by the training model, and the cost and volume of data for training are growing. In general, hype and boom are inevitably followed by a decline in interest. AI will leave the limelight as quickly as it entered it, and this is just the normal course of the process. Perhaps not everyone will survive the downturn, but AI really is a ‘toy for the rich’, and it will remain so in the near future.” I agree with Alexey: after the hype at the beginning of 2023, there was a lull by the fall.

Adding to the picture is an investigation by the Wall Street Journal, according to which most IT giants have not yet learned how to make money on the capabilities of generative AI. Microsoft, Google, Adobe and other companies that invest in artificial intelligence are still looking for ways to monetize their products. Here are some examples:

— Google plans to increase the subscription price for AI-enabled software;

— Adobe sets limits on the number of requests to AI services per month;

— Microsoft wants to charge business customers an additional $30 per month for the ability to create presentations using a neural network.

And the icing on the cake is the calculation by David Cahn, an analyst at Sequoia Capital, showing that AI companies will have to earn about $600 billion a year to offset the costs of their AI infrastructure, including data centers. The only company making good money on AI right now is Nvidia, the developer of the accelerators.

More information about the article can be found in the QR-code and hyperlink below.

Computing power is one of the main expenses when working with generative AI: the more requests the servers handle, the larger the infrastructure and electricity bills. Only the suppliers of hardware and electricity benefit. For example, in August 2023 Nvidia earned about $5 billion from sales of its A100 and H100 AI accelerators to the Chinese IT sector alone.

Two practical examples illustrate this.

First, Zoom is trying to reduce costs by using a simpler chatbot developed in-house, which requires less computing power than the latest version of ChatGPT.

Second, the best-known AI developers (Microsoft, Google, Apple, Mistral, Anthropic, and Cohere) have begun to focus on creating compact AI models, as they are cheaper and more cost-effective.

Larger models, such as OpenAI’s GPT-4, which has more than 1 trillion parameters and is estimated to have cost more than $100 million to build, do not have a radical advantage over simpler solutions in applied tasks. Compact models are trained on narrower data sets and can cost less than $10 million while using fewer than 10 billion parameters, yet they solve targeted problems.

For example, Microsoft introduced a family of small models called Phi. According to CEO Satya Nadella, these models are 100 times smaller than the free version of ChatGPT, yet they handle many tasks almost as well. Yusuf Mehdi, Microsoft’s chief commercial officer, said the company quickly realized that operating large AI models is more expensive than initially thought, so Microsoft started looking for more cost-effective solutions.

Apple also plans to use such models to run AI directly on smartphones, which should improve speed and security while keeping resource consumption on the device to a minimum.

Experts themselves believe that for many tasks, such as summarizing documents or creating images, large models may simply be redundant. Illia Polosukhin, one of the authors of Google’s seminal 2017 paper on artificial intelligence, compared using large models for simple tasks to driving a tank to the grocery store. “Quadrillions of operations should not be required to calculate 2 + 2,” he stressed.

But let’s take everything in order. Why did this happen, what limitations threaten AI, and most importantly, what will happen next? Will generative AI fade into another AI winter, or will it transform?

AI limitations that lead to problems

Earlier, I outlined the basic problems of AI. Now, let’s dig a little deeper into the specifics of generative AI.

— Companies’ concerns about their data

Any business strives to protect its corporate data and tries to rule out leaks by any means. This leads to two problems.

First, companies prohibit the use of online tools located outside the perimeter of the secure network, and any request to an online bot is a call to the outside world. There are many questions about how the data is stored, protected, and used.

Second, this limits the development of AI in general. Every company wants its suppliers to offer IT solutions with AI recommendations from trained models that will, for example, predict equipment failure. But not everyone is willing to share their data. The result is a vicious circle.

However, a caveat is needed here. Some teams have already learned how to deploy language models at the ChatGPT 3 to 3.5 level inside the company perimeter. But these models still need to be trained; they are not ready-made solutions. And internal security services will find risks and object.

— Complexity and high cost of development and subsequent maintenance

Developing any “general” generative AI is a huge expense: tens of millions of dollars. In addition, it needs a great deal of data. Neural networks still have low learning efficiency. Where 10 examples are enough for a person, an artificial neural network needs thousands or even hundreds of thousands. On the other hand, it can find relationships and process volumes of data that a person could never dream of.

But back to the topic. It is precisely because of this data limitation that ChatGPT “thinks” better if you communicate with it in English rather than in Russian. After all, the English-language segment of the Internet is much larger than the Russian-language one.

Add to this the cost of electricity, engineers, maintenance, repair and modernization of equipment, and you get that same $700,000 per day just to keep ChatGPT running. How many companies can spend such amounts with unclear prospects for monetization (more on this below)?

Yes, you can reduce costs if you develop a model and then remove all unnecessary things, but then it will be a very highly specialized AI.

Therefore, most of the solutions on the market are actually GPT wrappers, that is, add-ons to ChatGPT.

— Public concern and regulatory constraints

Society is extremely concerned about the development of AI solutions. Government agencies around the world do not understand what to expect from them, how they will affect the economy and society, or how far-reaching the technology’s impact will be. However, its importance cannot be denied. Generative AI made more noise in 2023 than ever before. These systems have proven that they can create new content that can be mistaken for human work: texts, images, and scientific papers. It has reached the point where AI can develop a conceptual design for microchips and walking robots in a matter of seconds.

The second factor is security. AI is actively used by attackers to target companies and people. Since the launch of ChatGPT, the number of phishing attacks has increased by 1265%. Or, for example, with the help of AI you can get a recipe for making explosives. People come up with original schemes and bypass the built-in safeguards.

The third factor is opacity. Sometimes even the creators themselves do not understand how AI works. For such a large-scale technology, not understanding what AI can generate and why creates a dangerous situation.

The fourth factor is dependence on training resources. AI models are built by people, and they are also trained by people. Yes, there are self-learning models, but highly specialized ones will also be developed, and people will select the material for their training.

All this means that the industry will start to be regulated and restricted. No one knows exactly how. Add to this the well-known open letter of March 2023, in which prominent experts from around the world called for limits on the development of AI.

— Lack of a chatbot interaction model

I assume you’ve already tried interacting with chatbots and were disappointed, to put it mildly. Yes, a cool toy, but what to do with it?

You need to understand that a chatbot is not an expert, but a system that tries to guess what you want to see or hear, and gives you exactly that.

To get practical benefits, you must be an expert in the subject area yourself. But if you are an expert in your topic, do you need generative AI? And if you are not an expert, you will not get a solution to your question, which means there will be no value, only general answers.

As a result, we get a vicious circle: experts do not need it, and it will not help amateurs. Then who will pay for such an assistant? In the end, we are left with just a toy.

Besides being an expert on the topic, you also need to know how to formulate a request correctly, and only a few people can. As a result, a new profession has even appeared: the prompt engineer. This is a person who understands how the machine thinks and can compose a query to it correctly. Such an engineer costs about 6,000 rubles per hour (about $60) on the market. And believe me, they will not find the right query for your situation on the first try.

Do businesses need such a tool? Will a business want to become dependent on very rare specialists who are even more expensive than programmers, given that ordinary employees will not benefit from it?

So, it turns out that the market for a regular chatbot is not just narrow, it is vanishingly small.

— The tendency to produce low-quality content, hallucinations

In the article “Artificial intelligence: assistant or toy?” I noted that neural networks simply collect data and do not analyze the facts or their coherence. That is, they are guided by whatever is most common on the Internet or in the database. They do not critically evaluate what they write. As a result, generative AI easily produces false or incorrect content.

For example, experts from the Tandon School of Engineering at New York University decided to test GitHub Copilot, Microsoft’s AI coding assistant, from a security point of view. They found that in about 40% of cases, the code generated by the assistant contains errors or vulnerabilities. A detailed article is available here.

Another example of using ChatGPT was given by a user on Habr. Instead of a simple 10-minute task, the result was a two-hour quest.

AI hallucinations have long been a well-known feature. You can read about what they are and how they arise here.

It is fine when such cases are harmless. But there are also dangerous mistakes. One user asked Gemini how to make a salad dressing. According to the recipe, garlic had to be added to olive oil and left to infuse at room temperature.

While the garlic was infusing, the user noticed strange bubbles and decided to double-check the recipe. It turned out that the bacteria that cause botulism were multiplying in his jar. Poisoning with the toxin of these bacteria is severe and can even be fatal.

I use generative AI myself from time to time, and more often than not it gives, let’s say, not quite correct results, and sometimes frankly erroneous ones. You need to spend 10 to 20 requests with absolutely insane detail to get something sane, which then still has to be reworked and refined.

That is, it needs to be rechecked. Once again, we come to the conclusion that you need to be an expert in the topic in order to evaluate the correctness of the content and use it. And sometimes it takes even more time than doing everything from scratch and by yourself.

— Emotions, ethics and responsibility

Without a proper query, generative AI will tend to simply reproduce information or create content without paying attention to emotions, context, and tone of communication. And from the series of articles about communication, we already know that communication failures can occur very easily. As a result, on top of all the problems above, we can also get a huge number of conflicts.

There are also questions about determining the authorship of generated content and the ownership rights to it. Who is responsible for incorrect or malicious actions performed using generative AI? And how can you prove that you or your organization is the author? Ethical standards and legislation regulating the use of generative AI need to be developed.

— Economic feasibility

As we’ve already seen, developing high-end generative AI yourself can be a daunting task. Many people will have the idea: “Why not buy a ‘boxed’ solution and deploy it in-house?” But how much do you think this solution will cost? How much will the developer ask for?

And most importantly, how big should the business be to make it all pay off?

What should I do?